Navigating the Risks of GenAI

Generative AI is one of the most transformative technologies of our time, reshaping industries from healthcare to finance. As a UX leader with 25 years of experience in design strategy, digital transformation, and AI-driven innovation, I’ve seen firsthand how AI-driven experiences can both unlock new value and introduce significant risks.

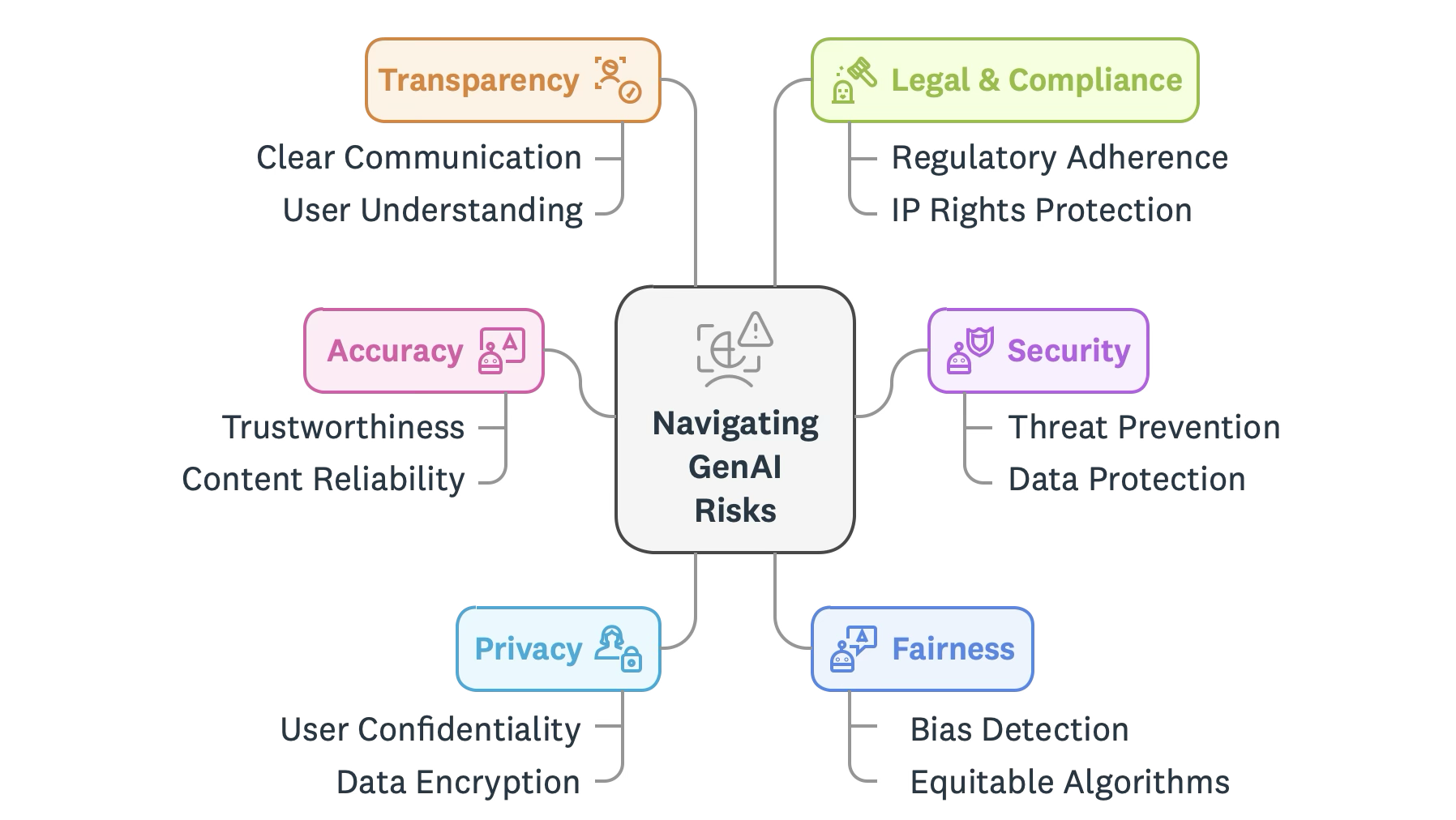

Over the last two years working at the bleeding edge for my clients, I’ve outlined six critical risks heightened by Generative AI—ranging from accuracy issues to legal concerns. As designers, strategists, and business leaders, we must not only understand these risks but also design safeguards to mitigate them.

Let’s break down these six risks and explore how UX design can play a critical role in responsible AI adoption.

1. Transparency: Building User Trust

Risk:

AI systems often operate as black boxes, leading to mistrust when users don’t understand how decisions are made.

How UX Can Help:

AI disclosures: Always inform users when they’re interacting with AI (vs. a human).

Trackable AI outputs: Allow users to see past AI-generated responses and provide feedback.

Explainability features: Use visual indicators, citations, and confidence scores to build trust.

Example:

At Docker, we worked on AI-driven developer tools. Users were skeptical of auto-generated code, so we designed “confidence indicators” that showed how reliable a suggestion was based on real-world usage.

2. Accuracy: Designing for Trust

Risk:

AI models can “hallucinate,” producing maGartnerde-up responses that seem credible but are factually incorrect. This poses a major challenge, especially in high-stakes industries like finance, healthcare, and legal services.

How UX Can Help:

Human-in-the-loop systems: AI-generated insights should always be verified by experts before being presented as truth.

Confidence scoring & transparency: Instead of presenting AI outputs as definitive, interfaces should indicate certainty levels or provide sources.

Smarter prompting: UX teams can integrate pre-designed prompts that improve accuracy before users even input queries.

Example:

At Gartner, we explored AI-driven recommendations for executives. However, ensuring factual accuracy was critical, so we designed confidence ratings and feedback loops that allowed users to verify and refine AI outputs.

3. Privacy: Protect Sensitive User Data

Risk:

AI systems often process confidential or personal data, leading to privacy risks if information is misused, stored incorrectly, or shared without consent.

How UX Can Help:

Privacy-by-design frameworks: Ensure that AI features only access necessary data and anonymize sensitive information.

Granular access controls: Allow users to control who sees their data and how it’s used.

Clear opt-in mechanisms: Users should have full transparency on when and how their data is shared with AI models.

Example:

In AI-driven healthcare platforms like Octave Bioscience, we prioritized patient consent models that gave users full control over their medical data while allowing AI to assist in decision-making.

4. Legal & Compliance: Avoid IP Violations

Risk:

Generative AI raises legal concerns around intellectual property (IP) and copyright violations—particularly when AI models are trained on protected content.

How UX Can Help:

IP attribution tools: Where possible, cite the sources used in AI-generated content.

Compliance safeguards: Implement usage tracking and contract enforcement mechanisms for AI-generated assets.

Clear legal guidelines for AI use: Provide terms of use that outline acceptable AI applications and responsibilities.

Example:

At McKinsey, we helped clients navigate AI compliance frameworks, ensuring that AI-assisted content creation aligned with legal standards and enterprise policies.

5. Security: Protect from Misuse and Manipulation

Risk:

Generative AI is susceptible to prompt hijacking, misinformation, and fraud. Malicious actors can manipulate models, leading to data breaches or misleading outputs.

How UX Can Help:

Designing robust guardrails: Implement input filtering to detect harmful or misleading prompts.

Adversarial testing: Before launching AI-powered features, test for misuse scenarios (e.g., attempts to extract confidential data).

AI behavior monitoring dashboards: Design tools for security teams to track and flag potential AI misuse in real time.

Example:

At McKinsey, we worked with enterprise clients to validate data integrity in AI-driven solutions. Proactive security design patterns helped prevent unauthorized access and ensured compliance with enterprise security standards.

6. Fairness: Combat Bias in AI Outputs

Risk:

Generative AI can amplify biases in training data, leading to unfair or discriminatory outcomes. If unchecked, this can reinforce systemic inequalities in hiring, lending, and healthcare.

How UX Can Help:

Diverse datasets: Work with AI teams to ensure training data represents all user demographics.

Bias testing in design reviews: Include AI fairness audits in the UX design process before launching features.

Explainable AI (XAI) principles: Give users insights into why AI made a particular decision and allow them to challenge outputs.

Example:

At Bloomberg, AI-powered financial tools risked reinforcing economic biases. By integrating bias-detection dashboards and manual review processes, we ensured AI recommendations were equitable across diverse user groups.

Designing for Intelligence and Responsibility

The AI revolution isn’t just about automation—it’s about augmentation. UX designers, strategists, and business leaders must take an active role in shaping AI’s accuracy, security, privacy, fairness, transparency, and legality.

What’s next for UX in an AI-first world?

AI-powered UX won’t replace designers, but it will redefine our role.

We must shift from screen-based interactions to designing AI-driven ecosystems.

Ethical AI is a design problem—one we must solve with intent.

As AI continues to evolve, the future belongs to those who align AI’s capabilities with human values. The next decade of UX design will be defined by those who can merge technology, strategy, and responsibility.

What do you see as the biggest risk (or opportunity) in AI-driven UX? How are you integrating AI ethics into your design process? Let’s connect and discuss!